by John Thackeray

Risk Management – The Transformation

Introduction

Never before in the age of risk management has so much been asked by so many of so few. Risk management is going through a transformation, the likes of which have never been seen before. The key drivers for this change include a persistently volatile environment, a deep longing to be considered a good social citizen, endless regulation, the growth of non-financial risk types, new methods of customer engagement and a need to address past mistakes. The change is being exacerbated by the new operating environment (working from home) enforced by COVID-19, which is forcing risk management to think differently in terms of architecture, people, processes, systems and value.

This paper looks at the key drivers and the implications they pose, and suggests a meaningful pathway for the future of risk management by means of change transformation.

Drivers

The current operating environment in which firms find themselves is anything but benign. COVID-19 has deepened structural fissures within an already fragile ecosystem. Negative interest rates, increased compliance costs, zombie loans and the continued levying of fines for money laundering and corruption failures have eaten into income and capital. Moreover, the persistence of scandals, highlighted every week by social media, has evaporated any goodwill towards financial institutions. Many financial institutions have been seen as facilitators of tax avoidance and enablers of financial crimes. The reputation of many is such that customer expectations, sentiment and engagement are low, with very little confidence in either the products or the messaging of the organizations. Simply put, financial organizations seem to many of their stakeholders to have lost their way, with no moral compass to lead them, leaving behind a bankrupt and obscure identity.

Having shot themselves in the foot, financial institutions now face retribution in the form of heavy regulation, partly for past sins but also to appease public opinion. The regulators now have a ready-made excuse to appear in the bowels of financial institutions and dictate terms under an ever-brighter spotlight. This oversight reaches across the globe, with regulation that can be retrospective, leading to unspecified fines for past mishaps from multiple agencies and countries.

Given the 2008 financial crisis, there is no longer an appetite to shore up financial institutions, and indeed there is an intolerance towards any protest from the firms about the growing depth and breadth of the new legislation being dictated. This legislation has led to more detailed and demanding capital, leverage, liquidity, funding and data privacy requirements, as well as higher standards for risk reporting, such as BCBS 239. The financial guard rails have been stiffened with more detail and requirements in the US banking system with regard to “CCAR” (Comprehensive Capital Analysis and Review) and by European Union guidelines with regard to stress testing, both bodies now seemingly dictating capital and dividend policy.

The growth of non-financial risk types, i.e. cyber, model, climate and conduct, has had a dramatic effect on financial institutions and their operations. Each risk has now entered the Enterprise Risk Management portfolio and needs to be addressed with urgency. Model risk has increased with data availability, advances in computing and modelling, and the need to address in quick order pressing legislation such as “CECL” (Current Expected Credit Losses). Climate risk has maintained its ascendancy as an emerging risk, with the Bank of England leading the way in terms of both supervision and legislation. Operational resilience has gained a foothold, boosted by COVID-19, with a resultant knock-on effect on reputational risk. Conduct risk has escalated as scandals highlighted by social media call into question how far firms will go to boost their profits. All these pressing risks have by themselves sequestered an inordinate amount of energy and cost, in terms of both mitigation and reporting.

Implications

These drivers will have huge implications for the effectiveness and adequacy of business systems and operations. Technology, or the increased reliance on it, will be seen as a panacea, the gatekeeper that can both thwart the risks and increase the opportunities posed by these drivers. The increased use of technology continues to transform the normal processes and channels of engagement and experience, and to accentuate the socially distanced relationship. Big Data, Machine Learning and Artificial Intelligence, championed by the burgeoning ranks of fintech firms, are the go-to components for mitigating the effect of the drivers by reimagining business processes.

As regulations become more complex and the consequences of noncompliance ever more severe, financial institutions will likely have no choice but to eliminate human interventions to hardwire the right behaviors and standards into their operations, systems, and processes. There will be a need for new algorithms to parse the data, which will need to be reviewed and challenged on a constant basis. Where these interventions cannot be automated, robust surveillance and monitoring will be increasingly critical.

Increased costs have led to an ever-increasing reliance on automation, both in decision making and in processes. The amount of big data being generated will enable the more astute to redesign their processes using a comprehensive set of both public and private data. Processes such as underwriting will be digitalized: information submitted need only be scanned and verified, without any in-person engagement.

Artificial intelligence and machine learning will be used in behavioral analysis and will remove a lot of the expert judgement required of risk officers, thereby eradicating any biases within the decision-making process.

Advances in technology will also help in the key areas of stress testing and scenario planning, especially in evaluation of climate risk within the portfolio. This advancement will lead to the multi-dimensional understanding of risks with complex models that need to be adjusted. While existing scenario analysis or stress testing frameworks can be leveraged, climate risk scenario analysis differs from the traditional use of these with longer time horizons, description of physical variables and generally the non-inclusion of specific economic parameters. These idiosyncrasies mean that data and climate scientists and engineers will need to be absorbed within the existing risk management structure. Moreover, stress testing and scenario planning will also have to incorporate operational sustainability and resilience which may call for significant contributions from external third parties to help complete the analysis and evaluation.

Change Transformation

The Target Operating Model of Risk Management of the future will be very different, with risk professionals armed with a new set of technology tools and new skillsets. In order for it to be an enabler, the organization needs risk to transform its vision and redefine its role structurally, given that many risk professionals will now need to work from a home environment. The main strategy will involve heavy reliance on, and incorporation of, new technology to both right-size and reimagine risk management practices.

Listed below are some suggestions, which no doubt can be modified depending on the size and complexity of the organization.

• Risk managers will be seen first and foremost as Firm Culture Champions and then as Risk Culture Champions. Building and maintaining these identical and symbiotic cultures will be critical to ensuring the success of both the enterprise and the risk function of the future. The combination of these cultures is likely to be a requisite element in a firm’s future competitive advantage. The secret recipe is to start with the risk culture and then distribute and evangelize, so that both cultures include a vision that advocates strong corporate values. In order for this to take root, the firm will need to monitor and survey the actions of its employees on a regular basis, no doubt aided by technology.

• The Chief Risk Officer (“CRO”) will be seen as a Champion of the firm and will be one of the stronger internal candidates to succeed the CEO. He or she will have to become an exceptional narrator who, armed with data, can convey and articulate the message of today. The brave new normal will call for greater transparency around disclosures concerning IT and supplier disruptions, operational resilience, cyber-attacks, sustainability and climate change. The CRO must be able to engage in the conversation with the right message and be the mouthpiece of the firm, backed by the data.

• The risk stripes will have to be reorganized structurally around clusters of correlated risk stripes; e.g. Fraud, Operations, Technology, IT Security, Compliance, Human Resources, Model, Conduct, Reputation and Anti-Money Laundering will all come within the same coordinated structure and governance rather than standalone silos. The synergies will result in smaller teams of agile, multi-disciplined staff with a depth and breadth of knowledge in one or more of these subject areas.

• Risk personnel will be trained in data analytics as a starting point and will be able to match this with practical experience in all risk stripes. The tour of duty will include cross-training in the various risk disciplines, which will enable the team to speak a common language while applying consistent standards. Risk professionals will be expected to wear many hats, with high expectations placed on their delivery and communication skills.

• The risk management ecosystem will demand a comprehensive enterprise-wide database, which is expected to help financial institutions create a repository for all types of structured and unstructured data. Since risk functions in the future are expected to become increasingly data driven, the supporting data infrastructure is a critical enabler. This data will have many uses and create a data-driven analytical risk area which will need to be resourced by staff with multiple skill sets. Understanding the data will improve overall quality, aggregation capabilities and risk reporting timeliness, allowing management information systems to present users with a great deal of information in real time and improving the quality and timeliness of fact-based decisions.

Passing Thoughts

Broader responsibilities, better trained, smaller, multi-risk disciplined, data hungry: these will be the new requisite qualities of risk personnel. Change will happen. The question is – are you willing to embrace the change or not? The firm that thinks ahead with this mindset will be the one left standing, not only with a competitive advantage but also with an enhanced reputation.

Procedures are written primarily to reduce the inherent risk by documenting the business process or activity. Effective procedures are a window into the control, governance and oversight of the organization.

In order for procedures to be effective, they should have the following traits.

- Consistent data points which are easily understood and communicated.

- The active voice, which is more direct and leaves less room for interpretation.

- An explanation of “why” the procedure is necessary, written so that a new hire can understand how the procedure helps the company achieve its objectives.

The focus of this paper is on the primary trait, data points. These set the standard and expectations which enable procedures to be written in a consistent and repeatable format. Moreover, common data points can ensure the proper enforcement of policy by reinforcing the guidelines and standards prescribed. This paper articulates a menu of data points which must be considered in the appreciation and application of this objective.

Below is a table of data points followed by explanations of each data point. **High/Medium/Low refers to scale in relation to admission to the procedures.

Data points explained.

1. Inherent Risk

Inherent risk is the assessed level of risk natural to a process or activity before anything is done to reduce the likelihood or mitigate the severity of a mishap; in other words, the amount of risk before the risk-reducing effects of controls are applied. It is usually categorized as High, Medium or Low. This categorization focuses the organization on understanding and addressing those processes which represent the greatest risks, thus enabling the proper allocation of resources to mitigate them.

2. Objective/Purpose and Scope

The purpose is the reason why the business exists, why you exist or why the team does what it does. The objective is what it needs to do to achieve its goals. The scope of an activity, project or procedure defines the boundaries of its application. These data points set the stage for the document and allow the reader to appreciate the significance of the process or processes.

3. Owner

The owner is the most important player within the process. Each owner has a unique responsibility and accountability for ensuring that the procedures are effective. Ownership is a measure of management skill and application and a true testament to standards, leadership and behavior. It is the responsibility of the owner to communicate clearly with, and train, those involved in the process. Given that the most effective control is segregation of duties, the Owner can never be the Approver.

4. Approver

Another implicit control is that of Authorizations, which ensures that the Approver is always one level above the Owner in the organizational hierarchy.
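The Owner and Approver rules described above lend themselves to a simple automated check. The following is a minimal sketch in Python, assuming a hypothetical numeric `level` for each person's position in the hierarchy (a higher number means more senior). The `Person` class and `check_authorization` function are illustrative names only, not part of any standard or system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    name: str
    level: int  # hypothetical hierarchy level; higher means more senior


def check_authorization(owner: Person, approver: Person) -> list[str]:
    """Return a list of rule violations (empty if the pairing is acceptable)."""
    problems = []
    # Segregation of duties: the Owner can never be the Approver.
    if owner.name == approver.name:
        problems.append("owner and approver are the same person")
    # Authorization: the Approver sits one level above the Owner.
    if approver.level != owner.level + 1:
        problems.append("approver is not one level above the owner")
    return problems


owner = Person("A. Owner", level=3)
approver = Person("B. Approver", level=4)
print(check_authorization(owner, approver))  # prints []
```

Returning a list of violations rather than a single true or false value keeps a record of which rule failed, which may be useful as audit evidence.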

5. Roles and Responsibilities

According to research by the Harvard Business Review, clearly defining people’s roles and responsibilities matters more when determining a team’s success than outlining the precise path the team will take. In other words, team members perform better when they know exactly what they will be responsible for versus having a specific set of predefined steps to complete.

6. Key Controls

Key controls are the procedures organizations put into place to contain internal risks. Key controls are identified because:

- They will reduce or eliminate some type of risk.

- They are regularly tested or audited for effectiveness.

- They protect some area of the business.

- They can expose a potential area of failure.

7. Escalation/Exceptions/Remediation/Overrides

Every process will from time to time require exceptions and overrides, which in turn require a clear and transparent escalation and remediation process. This process must be formalized, and records kept to document both the decision-making process and the approval authority. The process speaks to governance and oversight, as well as giving an indication of whether the procedures require revisions or amendments.

8. Training and Communication

This is perhaps the most overlooked data point, but it is an important part of the efficacy of the procedures. A lack of training and communication, or little evidence of either, implies a lack of ownership involvement.

9. New Procedures, 10. Legal/Regulatory, 11. Updates, Revisions and Amendments

All these data points are crucial in ensuring the currency and relevancy of the procedures. The owner is again responsible for compliance with each of these data points. Given that these data points are either ad hoc or determined on an annual basis, the materiality of these points has a lower ranking.

12. Business Continuity

A business continuity plan refers to an organization’s system of procedures to restore critical business functions in the event of an unplanned disaster. These disasters could include natural disasters, security breaches, service outages or other potential threats. In most procedures, there is usually a line item covering these plans and preparations.

13. Data Storage/Integrity/Governance/Management

Data is often said to be an organization’s greatest asset, and as such policy and standards are dictated at the enterprise level. Given the risks and the regulations surrounding data misuse, this is a vulnerability that needs to be addressed upfront. Great care is needed to ensure that any enterprise standards are being complied with and that personnel are cognizant of such standards. Again, this is a data point whose compliance gives an insight into how enterprise directives are being executed.

14. KPIs, KRIs

While Key Risk Indicators (KRIs) are used to indicate potential risks, Key Performance Indicators (KPIs) measure performance. At times, they represent key ratios that management can track as indicators of evolving risks, and potential opportunities, which signal the need for action. These measures are normally found in more mature processes.
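To illustrate how a KRI can signal the need for action, the sketch below classifies a reading against amber and red thresholds. It is a minimal Python example under stated assumptions: the indicator names, values and thresholds are hypothetical, and it assumes that a higher value always means more risk.

```python
def kri_status(value: float, amber: float, red: float) -> str:
    """Classify a KRI reading, assuming higher values mean more risk."""
    if value >= red:
        return "red"
    if value >= amber:
        return "amber"
    return "green"


# Hypothetical indicators, for illustration only: (value, amber, red)
readings = {
    "overdue_procedure_reviews": (4, 3, 6),
    "policy_exceptions_per_month": (1, 2, 5),
}

for name, (value, amber, red) in readings.items():
    print(name, kri_status(value, amber, red))
```

With these illustrative numbers, the first indicator is reported as amber and the second as green. In practice the thresholds would be set by the owner of the procedure and reviewed alongside it.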

Conclusion

In order for an organization to achieve consistent and repeatable procedures, it must first determine what data points are required and what data points are achievable. This paper has provided a menu which is not exhaustive, but which requires considerable thought with regard to the appropriate data points. Much will depend on the organization’s objectives and whether it wishes to have a set of free-standing procedures or procedures which are aligned to a consistent look and feel.

A consistent look and feel with consistent data points make the procedures more auditable and compliant with policy and standards.

In his article, 7 Key Elements of Effective Enterprise Risk Management, John Thackeray describes how a well-structured ERM system allows an organization to navigate, with some certainty, the risks posed to its business objectives and strategy. Without useful documentation and steps to broadly communicate the elements, the best planned ERM system will fail. In this article, John describes what it takes to document your ERM system.

Efficacy of Risk Documents

Good written risk documentation is both an art and a science, in a perfect world blending the writer and the subject matter expert as one. Unfortunately, we do not live in a perfect world and this blend is difficult to find. Too many risk documents have either been badly written by the subject matter expert or been rendered content-light and aspirational by the writer.

To achieve clarity, the risk documentation should be written from an independent viewpoint by someone who can challenge known assumptions with a questioning mind. The risk writer will need input from the business, seek collaboration and guide the organization towards ownership of the final document. As a result, the document will be an objective piece of writing, speaking the language of the organization while being understood by the outside world.

Good documentation is a prerequisite in the successful implementation of risk management, acting as a delivery and message mechanism. Documentation must:

- deliver a consistent message,

- speak a common language,

- have clear objectives allied to the maintenance of the organization’s objectives,

- be easy to review, evaluate and update frequently.

The documentation affects and defines the engagement with internal and external stakeholders, articulating and defining the organization’s culture, attitude, and commitment towards risk.

3 SIGNALS OF EFFECTIVENESS

The board has overall responsibility for ensuring that risks are managed. It delegates the operation of the risk management framework to the management team. One of the key requirements of the board is to gain assurance that risk management processes are working effectively and that key risks are being managed to an acceptable level. Therefore, the board requires comfort and assurance that risk documentation is being used and is directing the organization toward achieving its objectives.

Here are three signals of effectiveness.

1. Cultural attitude towards risk: This establishes and confirms clear roles and responsibilities that reinforce ownership, accountability and responsibility. Documentation underpins standard practices and policies, so a commitment to the guidelines speaks to the adequacy of a firm’s internal control environment. Most companies will have a risk charter which binds the Board and senior management to their fiduciary responsibilities. It will impose a structure and governance that add value by directing the pursuit of corporate objectives in a controlled fashion.

Part of this cultural attitude towards risk is evidenced in the Review and Challenge. Asking the right questions and verifying the correct answers demonstrate an organization’s comfort level with its governance and documentation processes. There must be a structure in place that allows employees to challenge these processes when necessary. For instance, 360-degree feedback and employee lunches with the C-suite both enable open communication and transparency.

Moreover, this will be evidenced through training. A commitment to training will speak volumes about the tone set from the top of the organization. Indeed, reinforcement through regular training will drive the corporate message home, ensuring a commonality of standards and purpose.

2. The right metrics. Metrics gauge the operational efficiency of documentation, and selecting the right ones will ensure that employees are compliant in terms of key performance and key risk indicators. Too few or too many of these metrics can paint a distorted picture; the chosen metrics must therefore be material and relevant to the documentation. Regular reviews of these metrics will indicate whether the documentation is fit for purpose. Return on equity, risk-adjusted return on capital and return on investment are some metrics that can be adjusted for risk.

3. Continuous assessment and review of policies and procedures. Reviews should consist of assessments based on representative samples and must include testing and validation by all engaged stakeholders. Documentation needs to be recalibrated if your organization has too many – or too few – “escalation incidents” or exceptions. These exceptions and escalations should be actively tracked to gain an understanding of the validity of the documents. With limited resources, only core and material documents need to be reviewed and tested, especially in the light of changing working conditions and impactful legislation. A structure which enforces this oversight is a sign that risk mitigation is part of the organization’s DNA.

Passing thoughts

These three signals are interlinked, each providing a layer of evidence that risk is being taken seriously by the organization.

Risk Documentation is where the written word captures the spoken word: documenting the ERM systems ensures intentions and actions are aligned – which makes for a better world.

This article was published on CFO.University

Fraud risk management should both inform and shape any third-party risk management program in conjunction with all the other risk disciplines. Now more than ever, with increased regulation and risk, organizations must conduct vigorous, structured and regular due diligence on third-party intermediaries. The risks posed by these parties are many and varied, ranging from cybersecurity to business disaster. With third parties accessing regulated company information, the likelihood and impact of IT security incidents are on the rise.

Regulators are looking for the methodology, the approach and the sustainability of programs designed to capture and mitigate these risks. Moreover, regulators are seeking evidence on how a program and its processes are embedded and aligned within an organization’s risk culture and risk appetite.

Possessing a robust, structured program to mitigate these risks can protect corporate reputation and shield executives, board members and other management from personal and professional liability. At its core, such a program incorporates a risk-based approach, which is a methodical and systematic process of knowing the company’s business, identifying its risks and implementing measures that mitigate those risks.

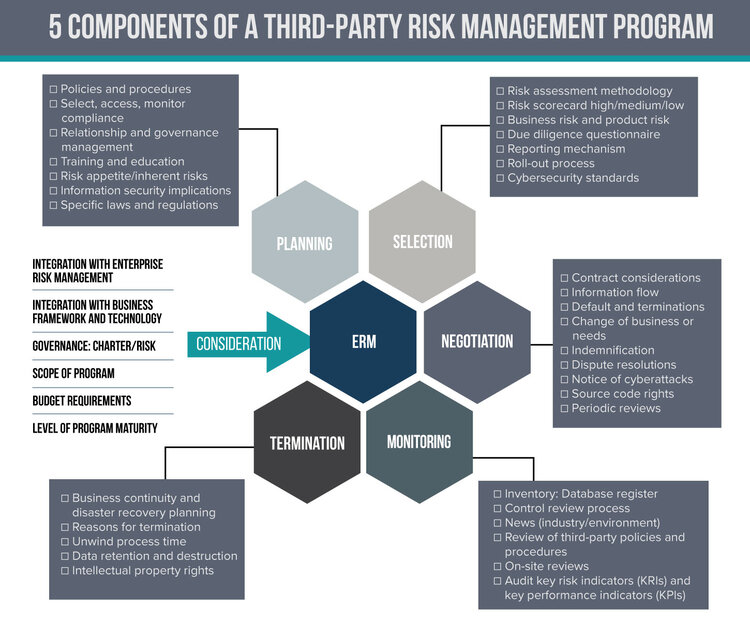

The diagram above portrays the key considerations, which are explained further below.

Planning

Each third-party relationship brings with it several multidimensional risks that extend and traverse across suppliers, vendors, contractors, service providers and other parties. An effective third-party risk management process begins by comprehensively identifying third-party risks. This risk identification process should be followed by an analysis of the specific drivers that increase third-party risk. Moreover, your organization needs to understand its universe of vendors and how the third-party ecosystem engages, interacts and connects with its internal and external operating environment.

With an understanding of its risk appetite for vendor risk, your organization can develop a risk framework with a coherent and consistent set of policies and procedures which define the paradigm of an objective risk assessment model, crucial in creating a risk profile for third parties. The policies and procedures will, furthermore, describe the implementation of the system, resources, acceptable mitigants, roles and responsibilities.

Selection

Your organization should take a risk-based approach to third-party screening and due diligence. Stratify your third parties into various risk categories based on the product or service, as well as the third-party’s location, countries of operation and key contributions. An important part of the process will be to mitigate an over-reliance on any key third party.
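The stratification described above can be sketched as a simple scoring model. The Python example below is illustrative only: the 1 to 3 scales, the score cut-offs, the 25% concentration limit and the names `ThirdParty` and `risk_tier` are all assumptions made for this sketch, not a prescribed methodology.

```python
from dataclasses import dataclass


@dataclass
class ThirdParty:
    name: str
    service_criticality: int  # 1 (low) to 3 (high), hypothetical scale
    country_risk: int         # 1 (low) to 3 (high), hypothetical scale
    share_of_spend: float     # fraction of total third-party spend


def risk_tier(tp: ThirdParty, concentration_limit: float = 0.25) -> str:
    """Assign a due diligence tier from a simple additive score."""
    score = tp.service_criticality + tp.country_risk
    # Over-reliance on a single third party adds to the score.
    if tp.share_of_spend > concentration_limit:
        score += 2
    if score >= 6:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


vendors = [
    ThirdParty("Cloud host", 3, 1, 0.30),
    ThirdParty("Payroll provider", 2, 2, 0.05),
    ThirdParty("Stationery supplier", 1, 1, 0.01),
]
for v in vendors:
    print(v.name, risk_tier(v))
```

With these hypothetical inputs the three vendors fall into the high, medium and low tiers respectively. The tier would then drive the depth and frequency of screening and due diligence.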

Negotiation

Standardized contracts are a must, outlining the rights and responsibilities of all parties, with suitable metrics in place to sustain the relationship. Given the importance of supply chains today, the contract should identify any subcontracting to a fourth party. The key is to contractually bind third parties to inform and get approvals on any fourth-party involvement and ensure that fourth parties are in the scope of screening and risk management processes. Understanding the business continuity process and the compliance requirements of the third party are also important considerations in the selection process.

Monitoring

Monitoring is essential as it will ensure that performance standards set by the program are being implemented and followed with the imposition of well-defined metrics to measure the effectiveness of the program. Continuous third-party monitoring and screening is the key to helping companies make informed decisions about their third parties, with screening against global sanctions lists, law enforcement, watchlists and adverse media reports.

Termination

The termination process is often overlooked, but it is a crucial part of the negotiation. It should take what-if scenarios into account, with various trigger points that allow your organization to extricate itself from the relationship in an orderly and timely fashion.

Third-party risk management is one of the top emerging risks, and fraud risk management needs a seat at the risk table to both impact and inform the program but more importantly keep it relevant with regard to outside influences. Fraud risk management can no longer be a silent partner when it comes to third-party risk management.

This article was published on ACFE Insights

If a company wants to minimize the effects of risk on its capital and earnings, reputation and shareholder value, it must implement a comprehensive enterprise risk management (ERM) program. A successful ERM framework not only aligns a firm’s people, processes and infrastructure but also yields a benchmark for risk/reward and aids in risk visibility for operational activities.

Ultimately, ERM should provide a firm with a competitive advantage – but what factors should be evaluated as one goes about developing it? Here are seven key components that must be considered:

1. Business Objectives and Strategy

Risk management must function in the context of business strategy, and the first step in this integration is for the organization to determine its goals and objectives. Typical organizational strategic objectives include market share, earnings stability/growth, investor returns, regulatory standing and capital conservation.

From there, an institution can assess the risk implied in its strategy implementation and determine the level of risk it is willing to assume in executing that strategy. The firm’s internal risk capacity, existing risk profile, vision, mission and capability are among the factors that must be considered when making this determination.

All strategies are predicated on assumptions (beware of those that are unspoken and unverified) and calculations that may or may not be accurate; the role of ERM is to challenge these assumptions and, moreover, to execute the strategy. ERM and strategic management are not two separate things. Rather, they are two wheels of a bicycle that must be built uniformly to contribute to the stability of the whole.

2. Risk Appetite

Risk direction is defined by the risk appetite, which in turn is defined as “the amount of risk (volatility of expected results) an organization is willing to accept in pursuit of a desired financial performance (returns).”

A risk appetite statement is the critical link that combines strategy setting, business plans, capital and risk. It reflects the entity’s risk management philosophy and influences the culture and operating style. A firm’s existing risk profile, risk capacity, risk tolerances and attitudes toward risk are among the considerations that must be taken into account when developing the risk appetite.

The risk appetite statement should be developed by management (with board review) and must be translated into a written form. The overall risk appetite is communicated through a broad risk statement, but should also be expressed, individually, for each of the firm’s different categories of risk.

An effective risk appetite statement should be precise, so that it can not only be communicated and operationalized but also aid in decision making. More importantly, it needs to be broken down into specific operating metrics that can be monitored.

Once the risk appetite is set, it needs to be embedded, and then continuously monitored and revised. As strategies and objectives change, the risk appetite must also evolve.

3. Culture, Governance and Taxonomy

The risk appetite statement should be conveyed through culture, governance and taxonomy. These three factors help an organization manage and oversee its risk-taking activities.

A strong risk culture – set from the top and augmented by comprehensively defined roles and responsibilities, with clear escalation protocols – is a must for successful ERM implementation. Strong, well-thought-out risk management principles, combined with ownership and culture training, help promote, reinforce and maintain an effective risk culture. Evidence of this strong risk culture can be seen in open communication, both in conflict resolution and in top-down/bottom-up decision making.

Operating and support areas, from the perspectives of engagement, training and support, must be included in a healthy ERM program. In fact, with tone from the top, these areas can become partners and even owners with the ability to manage outcomes, ensuring transparency and accountability.

Good ERM is about understanding change and managing that change within the overall mandate – rather than in isolation. Intertwined with this change is a need for a risk taxonomy, which can help better identify and assess the impact of the risks undertaken.

4. Risk Data and Delivery

It’s all about the data – more specifically, collecting, aggregating and distributing the correct data. Risk data and delivery must be robust and to scale, so that the information collected, integrated and analyzed can be translated into cohesive, credible narratives and reports.

5. Internal Controls

The internal control environment helps senior management reduce the level of inherent risk to an acceptable level, known as residual risk. Undoubtedly, it is one of the most important tools in the risk manager’s toolbox.

Residual risk is the level of inherent risks reduced by internal controls. An effective control environment must encourage and allow for a consistent structure that is balanced and realistic, within the context of a company’s internal workings.
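
The relationship between inherent and residual risk can be sketched numerically. The snippet below assumes a common simplification that is not taken from the article: control effectiveness is expressed as a fraction between 0 and 1, and residual risk is the inherent score scaled by whatever the controls fail to mitigate. The function name and scoring scale are hypothetical.

```python
def residual_risk(inherent_score: float, control_effectiveness: float) -> float:
    """Residual risk under the assumed rule: inherent x (1 - control effectiveness).

    control_effectiveness is a fraction in [0, 1]; 0 means no mitigation,
    1 means the controls fully offset the inherent risk.
    """
    if not 0.0 <= control_effectiveness <= 1.0:
        raise ValueError("control_effectiveness must be between 0 and 1")
    return inherent_score * (1.0 - control_effectiveness)


# Illustrative: an inherent score of 20 with controls judged 75% effective.
print(residual_risk(20.0, 0.75))
```

Other scoring conventions, such as subtracting a control rating or using a lookup matrix, are equally plausible; the point is only that the residual figure is derived from the inherent figure and an explicit assessment of the controls.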

6. Measurement and Evaluation

Measurement and evaluation determine which risks are significant, both individually and collectively, as well as where to invest time, energy and effort in response to these risks. Various risk management techniques and tools should be used to measure and quantify the risks, on both aggregate and portfolio levels.

To meet the requirements of different stakeholders and oversight/governance bodies, all risks, responses and controls must be effectively communicated and reported. The oversight/governance bodies are tasked with ensuring that a firm’s risk profile aligns with its business and capital plans.

7. Scenario Planning and Stress Testing

Given that management must address known and unknown risks, tools like scenario planning and stress testing are used to help shed light on the unknown risks and, more importantly, on the interconnection of these risks. Armed with this information, the organization can develop contingency plans to model these risks and to at least counter their effects on future operational viability.
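
A very simple stress test can be sketched as follows. The portfolios, loss rates, scenario names and capital buffer are all hypothetical assumptions used for illustration; the sketch applies each scenario's assumed loss rates to several portfolios at once, which is one basic way to expose interconnected risks.

```python
def scenario_loss(exposures, loss_rates):
    """Sum exposure x assumed loss rate across portfolios for one scenario."""
    return sum(amount * loss_rates.get(portfolio, 0.0)
               for portfolio, amount in exposures.items())


def run_stress_test(exposures, scenarios, capital_buffer):
    """For each scenario, report the estimated loss and whether the buffer absorbs it."""
    results = {}
    for name, loss_rates in scenarios.items():
        loss = scenario_loss(exposures, loss_rates)
        results[name] = {"loss": loss, "within_buffer": loss <= capital_buffer}
    return results


# Illustrative inputs only (amounts in arbitrary currency units).
exposures = {"retail": 100.0, "corporate": 200.0}
scenarios = {
    "mild downturn": {"retail": 0.25},
    "severe downturn": {"retail": 0.5, "corporate": 0.25},  # both portfolios hit together
}
for name, outcome in run_stress_test(exposures, scenarios, capital_buffer=60.0).items():
    print(name, outcome)
```

Scenarios whose loss exceeds the buffer are the natural starting point for contingency planning.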

Parting Thoughts

ERM is not a passing fad. Indeed, it is now instrumental to the survival of an organization.

It allows an organization to navigate, with some certainty, the risks posed to its business objectives and strategy. In short, ERM is good business practice.

This article was published on Global Association of Risk Professionals

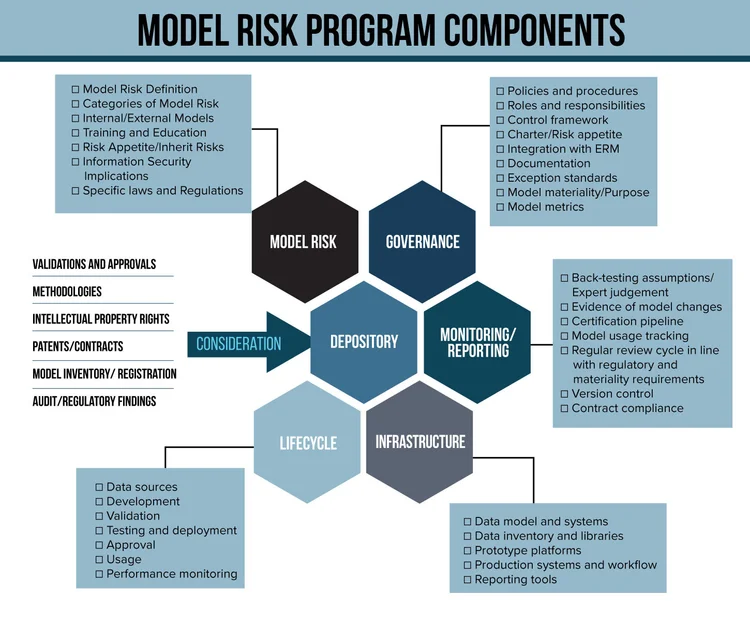

Models are all around us — integral and important to operational efficiency — but the risks that they sometimes pose can materially impact the financial well-being of even the most well-structured organizations. In order to understand the risks, we must first define what a model is and what the inherent risks are when operating a model.

A model refers to a quantitative method, system or approach that applies statistical, economic, financial or mathematical techniques and assumptions to process data into quantitative estimates. In other words, it’s a methodical way to process and sort data. A model consists of three components:

- An input

- A processing element

- An output

To build on that idea, model risk is the potential for the misuse of models to adversely impact an organization. Model risk primarily occurs for three reasons: a) data, operational or implementation errors; b) prediction errors; and c) incorrect or inappropriate usage of model results.

Models assist in the identification, assessment, evaluation and measurement of risk. They can also assist in monitoring both nonfinancial risks, such as behavioral analysis, and financial risks, such as credit risk.

It therefore makes sense to warehouse these different models under a central Model Risk program. Such a holistic program can offer a consistent approach in addressing the challenges outlined below.

Challenges of a model risk program

Model risk programs can have the following inherent challenges:

- A lack of appreciation and understanding of the total number of models (inventory) being utilized by the organization

- Lack of a valid method to update the model inventory on a regular basis

- Inability to maintain the independence of the model validation department

- A model validation process that fails to demonstrate effective challenge, review and independence

- Lack of suitable data used in the model development process to support review/validation

- Lack of developmental evidence to substantiate model assumptions

- Lack of an explanation to support the application of expert judgment and model overrides

- Lack of validation or failure to re-evaluate at regular intervals

- Failure to maintain comprehensive and up-to-date model documentation

What to include in your model risk program framework

In order to address these challenges, I would recommend incorporating the following nine model risk documentation components that enable the framing of your model risk program.

9 risk documents that help frame the model risk program

- A clear and consistent organizational narrative documented in writing covering principles, objectives, scope, model risk program design (including standards), model risk appetite, model risk taxonomy, controls and industry regulations.

- A policy describing the oversight and governance which will include the roles and responsibilities of all stakeholders and participants.

- Well-thought-out and actionable model risk management policies and procedures to include: a) data management policy; b) model validation policy and requirements; and c) model documentation requirements for both in-house developed models and third-party vendor models.

- Policy and procedures to include the completeness of the current model inventory and process of updating on an ongoing basis.

- Policy and procedures which will risk rate the model’s materiality to the function of the organization.

- A model development and implementation policy which incorporates the following considerations: a) integration into new products; b) planning for model updates and changes; and c) planning for additional uses of existing models.

- A model validation policy which incorporates the following considerations: a) evaluation of conceptual soundness, methodology, parameter estimation, expert and other qualitative data; b) assessment of data inputs and quality; c) validation of model outcomes; d) assessment of ongoing monitoring metrics and performance; and e) model risk scoring.

- A documented model issue management and escalation process which will describe the issues, cataloguing of issues, issue remediation and action plans.

- Disaster and contingency planning policy for approved models describing the Plan B in case of model failure, corruption or cyberattack.
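
Several of the documents above (the model inventory, the materiality rating and validation at regular intervals) lend themselves to a simple register. The sketch below is one hypothetical way to hold such an inventory and flag models that are overdue for validation; the field names, tiers and revalidation intervals are assumptions for illustration, not requirements drawn from any regulation.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed revalidation intervals by materiality tier (hypothetical values).
REVALIDATION_DAYS = {"high": 365, "medium": 730, "low": 1095}


@dataclass
class ModelRecord:
    model_id: str
    owner: str
    materiality: str       # "high", "medium" or "low"
    last_validated: date


def overdue_for_validation(inventory, as_of):
    """Return the IDs of models whose last validation is older than their tier allows."""
    overdue = []
    for record in inventory:
        max_age = timedelta(days=REVALIDATION_DAYS[record.materiality])
        if as_of - record.last_validated > max_age:
            overdue.append(record.model_id)
    return overdue


# Illustrative inventory; the models and dates are invented.
inventory = [
    ModelRecord("credit-pd-01", "Credit Risk", "high", date(2023, 1, 15)),
    ModelRecord("aml-screen-02", "Compliance", "medium", date(2023, 6, 1)),
]
print(overdue_for_validation(inventory, as_of=date(2024, 6, 30)))
```

Tying the revalidation interval to the materiality tier keeps validation effort focused on the models that matter most to the organization.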

The payoff of so much documentation

Model risk is very real, and I’ll be honest — it requires a heavy lift in documentation. But by covering your bases, the documentation will provide a consistent set of standards, which articulate guiding principles that cover the model process and provide comprehensive guidance for practice and standards on an enterprise-wide level.

This article was published on ACFE Insights

Operational resilience is defined as the ability of firms, industries, and sectors as a whole to prevent, respond to, recover, and learn from operational disruption. It is a set of techniques that allows people, processes, and informational systems to alter operations in the face of changing business conditions.

Enterprises that are operationally resilient have the organizational competencies to ramp up or slow down operations in a way that provides a competitive edge and enables quick and local process modification.

A resilient enterprise is able to recover its key business services from a significant unplanned disruption, protecting its customers, shareholders, and reputation—and, ultimately, the integrity of the financial system. But enterprise operational resilience is about more than just protecting the resilience of systems; it also covers governance, strategy, business services, information security, change management, run processes, and disaster recovery. Avoiding disruption to a particular system that supports a business service contributes to operational resilience.

Thus, operational resilience is an outcome. Operational risk, meanwhile, is a risk—which, if not properly controlled, threatens operational resilience. Therefore, in order to achieve operational resilience a firm must first manage operational risk effectively.

The Operating Environment and the Influence of Regulators

The operating environment for financial firms has changed significantly in recent years, with many adverse and material events becoming a near certainty. Regulators now want operational resilience to be a process that boards and senior managers are directly engaged with and responsible for through governance and assurance models.

Regulators are promoting the principles that foster effective resilience programs and their benefits for firms, customers, and markets. In July 2018, the U.K.’s financial services regulators (the Bank of England, the Prudential Regulation Authority, and the Financial Conduct Authority) brought the concept of operational resilience into the limelight with the publication of a joint discussion paper, “Building the UK Financial Sector’s Operational Resilience.”

The key requirements noted in the discussion paper include the following:

- Governance: The paper emphasized the role of the boardroom in operational resilience. Accountabilities and responsibilities for senior management need to be clearly defined and set against an unambiguous chain of command.

- Business operating model: The model must be properly understood, including key business services and the people, systems, processes, and third parties that support them, with accountabilities agreed to.

- Risk appetite and tolerances: Organizations need to understand and clearly articulate their operational risk appetite and impact tolerance for disruptions to key business services through the lenses of impacts to markets, consumers, and business viability.

- Planning and communications: Organizations need to have meaningful plans that are tested not only by the organizations themselves but in partnership with their contributing stakeholders.

- Culture: There must be a shift in mindset toward service continuity and a continuous improvement approach. That can be achieved by embedding a “resilience culture” that reinforces and promotes resilient behaviors.

What It All Means

The Bank of England and other central banks are likely to be more interested in system-wide scenarios of disruption and common vulnerabilities (for example, firms relying on third parties), while individual firms will often focus on and test firm-specific scenarios.

Central banks may wish to test whether firms collectively have adequate resources to deal with a severe operational disruption and whether firms may be undertaking their contingency planning without the availability of common resources.

This is especially relevant in the payments system and may require a common sharing of payment capability if a firm’s systems were to be compromised. The idea of sharing a competitor’s payment platform may seem absurd, but the need to safeguard the greater good may outweigh an individual firm’s vested interests.

The Bank of England’s approach is built around two key concepts: impact tolerances and business services.

Impact tolerance is defined as a firm’s tolerance for disruption in the form of a specific outcome or metric. Crucially, tolerance is built on the assumption that disruption will occur and that the tolerance remains the same irrespective of the precise nature of the shock. The tolerance is cause-agnostic. So, rather than concentrating risk mitigation solely on minimizing the probability of a disruptive event, impact tolerance focuses the board and senior management on minimizing the impact of a disruption. Impact tolerance thus provides a focus for response, recovery, and contingency planning alongside traditional operational risk management.

Impact tolerance is then linked to a business service. Doing so provides a clear focus for firms’ efforts to enhance their operational resilience, which may include, for example, plans to upgrade IT systems, business continuity exercises, and communication plans. Importantly, the focus is on business services—not IT systems.

What Will Your Institution’s Approach Be?

Firms should be taking six critical actions to support and evolve their approach to operational resilience:

- Identify critical services: This is the discovery phase. The enterprise should begin by documenting its business services and mapping them to the underlying technology (cloud infrastructure, data centers, applications, etc.) and business processes (disaster recovery, cyberincident response plans, etc.).

- Understand impact tolerance: In this phase, the underlying technologies and processes are then assessed against key performance indicators or key risk indicators. This assessment is used to create a risk score for each business service, which is then reviewed against agreed-to impact tolerances. Through the use of scenarios, firms need to estimate the extent of disruption to a business service that could be tolerated. Scenarios should be severe but plausible and assume that a failure of a system or process has occurred. Firms must then decide their tolerance for disruption—that is, the point at which disruption is no longer tolerable.

- Know your environment: Using the assessment, the firm develops a remediation plan that gives priority to the business services with the largest disparity between risk score and acceptable impact tolerance. Having been communicated to the regulators and aligned with their expectations, the remediation plan is then funded and executed, and the business service is reassessed for resilience. This should incorporate third parties, which are the second-largest root cause of operational outages after missteps in change management.

- Operationalize the program: The operational resilience program must be able to evolve with the business. Firms should understand which external or internal factors could change over time and which trends could impact the key business services identified, then adjust their resilience plans accordingly. An important step in the process is testing, which is also prioritized by the risk materiality of key business services. Tests such as the simulation of disruption events can advance the enterprise from having informed assessments to demonstrating capabilities to stakeholders and regulators.

- Robust and coherent reporting: For boards and senior management, risk metrics and reporting provide an important insight into the effectiveness of the operational resilience program. Having a robust communication policy and strategy that uses all forms of media and engages with all stakeholders is essential to any resilience program.

- Collaboration: Firms should work together, pool resources, and share information—in short, develop noncompetitive solutions to a shared threat—to the extent possible.
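
The prioritization step described in the actions above (ranking business services by the disparity between risk score and acceptable impact tolerance) can be sketched as follows. This is a minimal illustration that assumes both quantities are expressed on the same hypothetical scoring scale; the service names and scores are invented.

```python
from dataclasses import dataclass


@dataclass
class BusinessService:
    name: str
    risk_score: float        # assessed from KPIs/KRIs; higher means less resilient
    impact_tolerance: float  # highest score the firm has agreed it can tolerate


def remediation_priorities(services):
    """Return services that exceed their tolerance, largest gap first."""
    breaches = [s for s in services if s.risk_score > s.impact_tolerance]
    return sorted(breaches,
                  key=lambda s: s.risk_score - s.impact_tolerance,
                  reverse=True)


# Illustrative data only.
services = [
    BusinessService("lending", risk_score=60.0, impact_tolerance=55.0),
    BusinessService("payments", risk_score=80.0, impact_tolerance=50.0),
    BusinessService("reporting", risk_score=40.0, impact_tolerance=60.0),
]
for service in remediation_priorities(services):
    print(service.name, service.risk_score - service.impact_tolerance)
```

In practice an impact tolerance is often stated as an outcome, such as a maximum outage duration, so a real implementation would need an agreed mapping between that outcome and the score used here.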

Conclusion

Operational resilience is essentially an upgrade that moves operational risk management from passive to active. Operational risk management, once the poor sibling of credit and market risk management, has stepped into the limelight because its importance can no longer be overlooked. That being the case, it needs upscaling and upgrades of both resources and vision to bring ORM programs to a more resilient state. Given the number of pressing regulatory programs, firms must weave operational resilience into their infrastructure and mindset.

This article was published on The RMA Journal

OPERATIONAL RISK PROGRAM DESIGN INFLUENCES

A picture is worth a thousand words and the chart below depicts the core influences of an operational risk program in my view. (The second illustration depicts RMA’s Operational Risk Framework.) Operational risk is defined by the Basel Committee on Banking Supervision as “the risk of loss resulting from inadequate or failed business processes, people and systems or from external events.” Operational risks relate to areas such as cyber and fraud, crime prevention, human resources management, information technology, information security (including digital and multimedia), business continuity management, physical security, and vendor management.

An operational risk program design can be embedded in both financial and non-financial organizations and needs to be suited to fit the culture and objectives of the specific organization. The benefits of a program are multiple:

a) Understanding the key risks and applying relevant mitigants and controls.

b) Reducing the complexity in operations by understanding the key processes.

c) Inserting key performance indicators, thus ensuring more effective processing.

d) Improving resource preparation and allocation for future planning.

e) Speaking to the strength of the organization’s internal controls.

OPERATIONAL PROGRAM INFLUENCES

ENTERPRISE RISK MANAGEMENT

For an operational risk program to be successful, it must be fully integrated with the strategy and culture of the organization; otherwise it will have neither bearing nor credibility. To have influence, it must be scalable regardless of the size and complexity of the organization. The program must be managed at the enterprise level and will have a policy and procedures document which will outline the risk appetite, scope, and governance of the program. The policy and procedures document will incorporate many of the influences below, depending on the size and maturity of the program.

NEW ACTIVITIES

Operational risk arises in two areas: business as usual and new product/new activities conducted by the organization. Each of these areas will be influenced by regulatory and industry considerations. New products and activities require an added level of scrutiny, since these involve forecasted risks that have not yet manifested themselves and as such warrant an extra level of governance, usually managed by a committee. Moreover, these new activities will drive changes to the required framework in terms of key risk indicator (KRI) and key performance indicator (KPI) adjustments, new risk control self-assessment (RCSA) processes identified, and new scenarios considered.

COMMON INTEGRATED TOOLS

Definition, consistency, and standardization of tools, documents, and language are needed for a successful implementation. The tools will include: a) risk taxonomy (describes the risk, the event, and the effect); b) definition of inherent risk (no controls) and residual risk (with controls); c) an operational control library (describes the types of controls); d) scorecards; and e) rating scales for inherent risks and control effectiveness. Common metrics such as KPIs and KRIs need to be aligned in a manner that drives areas of focus and ensures planned control assessments. Finally, a standard, organization-specific RCSA will manage and evaluate the key processes and document the effectiveness, adequacy, and application of controls.

OPERATIONAL RISK DATA COLLECTION & ANALYSIS

The standard RCSA should be able to be decomposed, allowing the contents to be inputted into a central registry. Remediation and action plans flowing from the RCSAs should show ownership and a timescale of when these plans will be executed and finalized. Supplementing the data derived from the RCSA will be incident reports, audit reports, and compliance reports. Internal loss data needs to be captured in this central registry as well, providing a basis for operational risk management and mitigation strategies. Collection of this diverse data is important, as the information contained will aid in understanding the effectiveness of the controls and the ability to predict patterns and trends which warrant further investigation.

SCENARIO ANALYSIS

A model which incorporates stress and scenario analysis will enable the organization to gain foresight and to evaluate the different types of responses needed under different operating environments. Note that this will be associated with a more mature program, as it will require a rich level and history of data points together with advanced modelling skills.

CONTROL ENVIRONMENT

A control framework is a data structure that organizes and categorizes an organization’s internal controls, which are practices and procedures established to create business value and minimize risk. The framework will outline the key processes and activities, key documentation requirements, methodology assessments, governance (roles and responsibilities), and escalation and monitoring/reporting responsibilities. Continuous education and training will play a major part in embedding and maintaining this control environment, and will be the key factor in successful and effective implementation.

REPORTING

The most important influence will be the reporting aspect and the different requirements of the audiences, both internal and external, that need to be informed and addressed. The information supplied should include meaningful metrics that show trend, materiality, and control effectiveness. The reporting will also need to cascade down and filter up, with governance decisions documented and actioned. Reporting will further include a catalogue of material incidents, an evaluation by audit or a third party of the effectiveness of the program, and a pronouncement as to the quality control and assurance of the program.

PARTING THOUGHTS

The internal control structure of any organization is under constant threat with the advent of cyber risk and the explosion of social media. Operational risks are expanding and emerging with the constant and rapid deployment of new technology. An operational risk program, whether small or large, immature or mature, is a must-have. Without it, the organization can quickly lose both credibility and reputation; examples include Volkswagen, GM, and Toyota. The implementation is not difficult, but it does require vision, application, and documentation to ensure effectiveness.

This article was published on The Risk Management Association

In the volatile and unpredictable realm of financial services, risk documentation must be current, viable, operational and, most importantly, effective. Exactly how, you might ask, can this be achieved? That’s a very good question.

The success of risk documentation depends, in part, on the size and operating environment of each individual firm, as well as on the time, energy and cost that each firm allocates to this cause. But there are seven key risk documentation guidelines (which I like to call the Magnificent Seven) that every firm can follow:

- Establish roles and responsibilities. Relevant stakeholders must be identified and assigned tasks.

- Choose and implement the right metrics. Metrics gauge the operational efficiency of documentation, and selecting the right ones will ensure that employees are compliant in terms of key performance and key risk indicators. Too few or too many of these metrics can paint a distorted picture; the chosen metrics must therefore be material and relevant to the documentation. Regular reviews of these metrics will indicate whether the documentation is fit for purpose.

- Build a structured process that allows for challenges. Asking the right questions and verifying the correct answers demonstrate an organization’s comfort level with its governance and documentation processes. There must be a structure in place that allows employees to challenge these processes, when necessary.

- Emphasize data integrity. Good documentation should always be written with a view toward output. An understanding of how the data is collected and delivered will give a clear indication about how the documentation fits within the operating environment. The true test is a meaningful output.

- Enforce policies and procedures. Attestation, by itself, is not enough. Reviews should consist of assessments based on representative samples and must include testing and validation by all engaged stakeholders.

- Develop exception and escalation protocols. Documentation needs to be recalibrated if your organization has too many – or too few – “escalation incidents.”

- Prioritize training. A commitment to training will speak volumes about the tone set from the top of the organization. Indeed, reinforcement through regular training will drive the corporate message home, ensuring a commonality of standards and purpose.

Documentation underpins standard practices and policies, so a commitment to the guidelines speaks to the adequacy of a firm’s internal control environment.

Parting Thoughts

The proof of the pudding is in the eating, but to some it might seem like the pudding has been overbaked. The “Magnificent Seven” require effort and engagement from all parties concerned. A simple attestation is a non-starter, because it’s an easy option that can mask both ignorance and a lack of accountability.

In the long run, since effective risk documentation protects both the economic standing and reputation of an organization, the effort required to follow these guidelines is well worth it.

This article was published on Global Association of Risk Professionals